Today's telecom networks generate huge data volumes (in petabytes), and these volumes are growing exponentially over time. The data can be structured or unstructured. It contains a wealth of information, and timely analysis of it can reveal insights that help improve customer satisfaction, build better business plans and roll out new products targeted at individual customer needs.

Most operators make business and operational decisions by analyzing data manually and offline, which prevents them from providing better services and offerings to users in real time. Offline analysis of big data increases response time, which puts pressure on profitability in a competitive market. At the same time, a lot of technology investment goes into back-end infrastructure to support the offline analysis. The increased cost of infrastructure and the opportunities lost because of offline analysis are among the biggest challenges that telecom operators need to address.

The data flowing through telecom networks resides in different systems, applications and databases, e.g. billing, inventory, network management systems, network elements and other applications. In a typical setup, offline ETL processes aggregate the data from these sources and store it in a traditional BI system, where operators analyze it further, e.g. to determine network usage and performance, user experience and other factors that affect the profitability of the business.

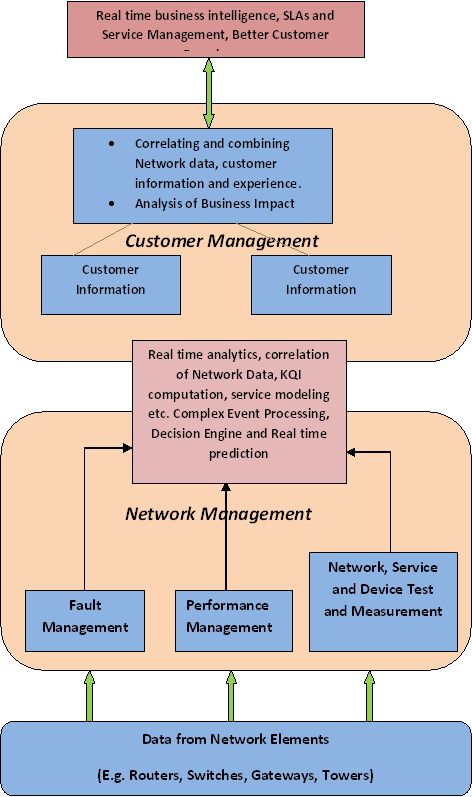

The solution to this problem is real-time analytics, which lets operators correlate data and take actions and decisions in real time. It helps operators improve their businesses and networks, offer a better customer experience and become more profitable. Operators today are looking for this kind of solution: one that analyzes discrete, unstructured and huge data in real time and enables them to optimize their networks, act in real time and provide better services to their high-value customers.

As data volumes grow, Hadoop is becoming the framework of choice for ad-hoc, massive-scale offline batch analytics. However, a serious limitation of Hadoop is that it cannot be used to make real-time decisions. HFlame, a product from Data Advent (www.hflame.com), breaks this limitation. HFlame transforms the customer's existing Hadoop infrastructure into a real-time data analysis infrastructure. With HFlame, Hadoop platforms can run continuous analysis on data streams stored inside the Hadoop Distributed File System (HDFS). HFlame uniquely enables enterprises to run real-time big data analytics without introducing any additional technology components (e.g. data storage or data processing APIs). With HFlame, the same Hadoop platform can meet both the offline and online data analytics needs of an enterprise and deliver instant insights without any alternate technologies.

Because HFlame analyzes data in real time by running Map-Reduce jobs continuously, resource utilization is maximized, and a Hadoop cluster up to 10x smaller can produce the same analysis results in the same or less time than a much larger traditional Hadoop cluster. HFlame cuts traditional Hadoop batch analytics processing time by more than 95%: analytics that would otherwise take hours to finish can report results in less than a minute.
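This post does not describe how HFlame runs Map-Reduce jobs continuously, and nothing below is HFlame or Hadoop code. As a rough conceptual sketch only, one common way to make map-reduce style analysis continuous is to keep the reduce state between runs and fold in only newly arrived data, instead of re-scanning the full history on every batch run. Whether HFlame works this way is an assumption. The record layout (cell ID, dropped-call flag) and all names such as `map_phase`, `reduce_phase`, `batch_job` and `ContinuousJob` are hypothetical.

```python
from collections import Counter
from typing import Iterable, Iterator, List, Tuple

# Hypothetical record layout: (cell_id, dropped_call_flag), flag is 0 or 1.
Record = Tuple[str, int]


def map_phase(records: Iterable[Record]) -> Iterator[Tuple[str, int]]:
    # Map: emit one (key, value) pair per record, keyed by cell.
    for cell_id, dropped in records:
        yield cell_id, dropped


def reduce_phase(pairs: Iterable[Tuple[str, int]], totals: Counter) -> Counter:
    # Reduce: sum the values per key into the given state, in place.
    for key, value in pairs:
        totals[key] += value
    return totals


def batch_job(history: List[Record]) -> Counter:
    # Offline style: every run re-scans the entire history from scratch.
    return reduce_phase(map_phase(history), Counter())


class ContinuousJob:
    """Hypothetical job that keeps reduce state and folds in only new data."""

    def __init__(self) -> None:
        self.totals: Counter = Counter()
        self.records_processed = 0

    def on_new_chunk(self, chunk: List[Record]) -> Counter:
        # Work done here is proportional to the size of the new chunk only.
        self.records_processed += len(chunk)
        return reduce_phase(map_phase(chunk), self.totals)


# Usage: two chunks of records arriving one after the other.
chunks = [
    [("cellA", 1), ("cellB", 0)],
    [("cellA", 0), ("cellB", 1), ("cellA", 1)],
]
job = ContinuousJob()
history: List[Record] = []
for chunk in chunks:
    job.on_new_chunk(chunk)  # result is up to date after each chunk
    history.extend(chunk)

print(dict(job.totals))          # {'cellA': 2, 'cellB': 1}
print(dict(batch_job(history)))  # same result, but re-reads the full history
```

Both approaches give the same totals; the difference is that each batch run grows with the whole history, while each continuous update only touches the new chunk. This is an illustration of the general idea, not a reproduction or measurement of HFlame's reported speedups.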

A follow-up post with a hands-on example is coming soon.

Check out www.hflame.com or www.dataadvent.com for more details.